Human-AI Collaborative Systems for DoD Applications

A major thrust of Dr. Banerjee’s lab is the development of intelligent human-AI collaborative teams. This research area is the focus of his collaboration with the Department of Defense in general and the Army Research Laboratory in particular. He has also extended the idea of leveraging human intelligence to human-assisted machine learning approaches for agricultural and accessibility applications.

AI will play a major role in augmenting cybersecurity operators. The primary metrics of success for such human-AI teams are performance and efficiency, i.e., whether the human-AI team outperforms a human-only team. The key to the success of such systems, however, is the AI’s adaptation to the human. Specifically, Dr. Banerjee’s lab has identified human emotional and cognitive states as properties that the AI must adapt to while working with humans. Unfortunately, state-of-the-art measures of human state and of human trust in automation still rely primarily on self-report questionnaires or other rudimentary measures. Such questionnaires are not real-time, may be unreliable, and are likely to disrupt naturalistic behavior, which can bias state measurements; hence, an AI cannot use these measures to modulate its responses to the human.

Although substantial progress has been made in this area, human-AI teams have yet to be widely deployed outside the laboratory, and research in this domain has been recognized as a priority by the 2019 update of the National Artificial Intelligence Research and Development Strategic Plan. Our lab has been working with the US Army Research Laboratory to advance this area by testing the following hypothesis: physiological measures such as heart rate variability (HRV), acquired with wearables, can be used to infer human states such as stress, and these inferred states can in turn modulate the information presented to cybersecurity operators.
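To make the hypothesis concrete, consider one widely used time-domain HRV measure, RMSSD: the root mean square of successive differences between adjacent inter-beat (RR) intervals. A drop in RMSSD relative to a person's resting baseline is often associated with reduced parasympathetic activity and higher stress. The sketch below is a minimal, hypothetical illustration and not the lab's actual model. It assumes RR intervals arrive in milliseconds from a wearable, uses a sliding window of recent beats, and flags stress when the windowed RMSSD falls below an assumed fraction (`ratio`, default 0.7) of a per-user baseline. The names `rmssd` and `RollingStressEstimator`, the window size, and the threshold are all illustrative.

```python
import math
from collections import deque


def rmssd(rr_ms):
    """Root mean square of successive differences of RR intervals (ms).

    RMSSD = sqrt( (1 / (N - 1)) * sum_{i=1}^{N-1} (RR_{i+1} - RR_i)^2 )
    """
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


class RollingStressEstimator:
    """Hypothetical real-time stress flag from a sliding window of RR intervals.

    Assumption (illustrative only): stress is flagged when the windowed RMSSD
    drops below `ratio` times the user's resting baseline RMSSD.
    """

    def __init__(self, baseline_rmssd, window=30, ratio=0.7):
        self.baseline = baseline_rmssd
        self.ratio = ratio
        # deque with maxlen keeps only the most recent `window` beats.
        self.window = deque(maxlen=window)

    def update(self, rr_ms):
        """Add one RR interval; return (rmssd, stressed), or None until 2 beats exist."""
        self.window.append(rr_ms)
        if len(self.window) < 2:
            return None
        value = rmssd(list(self.window))
        return value, value < self.ratio * self.baseline


if __name__ == "__main__":
    est = RollingStressEstimator(baseline_rmssd=40.0, window=5)
    # Variable beat-to-beat intervals (high HRV), then a very regular rhythm (low HRV).
    for rr in [800, 840, 790, 830, 805, 800, 802, 801, 803, 802]:
        result = est.update(rr)
        if result is not None:
            print(f"RR={rr} ms  RMSSD={result[0]:.1f}  stressed={result[1]}")
```

A fixed-length window keeps the per-beat update cheap enough for streaming use; in practice the baseline, window length, and threshold would need to be calibrated per user and validated against ground-truth stress labels.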

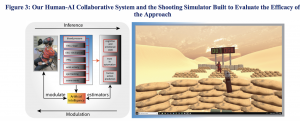

We adopt a model-based approach to this problem. Our system uses real-time models that infer human-AI trust and human state (e.g., stress) from psychophysiological measures collected by wearable sensors (EEG, EKG, and activity) with behaviorally relevant design features. The AI that implements these models modulates the information and recommendations presented to humans (or human teams) as a function of human-AI trust, human state, and application-specific scene information. Such a tool can be used in smart manufacturing environments to improve productivity and energy efficiency and to detect cyber-threats with higher precision. The final deliverable of this project is the system illustrated in Figure 4.

We demonstrate this system using an example shooting simulation built in virtual reality. A preliminary version of the shooting task, shown in Figure 3, has already been built in collaboration with the Army Research Laboratory, and research using this testbed has led to several publications and research grants.
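One way to picture the modulation step is a policy that maps inferred operator state to what gets shown. The sketch below is a hypothetical illustration, not the system's actual policy. It assumes upstream models output stress and trust normalized to [0, 1], shrinks the number of alerts linearly from `max_alerts` (calm) to `min_alerts` (maximum stress) so that only the most urgent items remain, and attaches an explanation to each alert when trust falls below an assumed `low_trust` threshold. The `Alert` and `OperatorState` types, the priority convention, and all thresholds are invented for illustration; application-specific scene information is omitted for brevity.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    message: str
    priority: int        # assumed convention: 1 = most urgent
    rationale: str = ""  # optional explanation that may be shown to the operator


@dataclass
class OperatorState:
    stress: float  # assumed normalized to [0, 1] by an upstream model
    trust: float   # assumed normalized to [0, 1] by an upstream model


def modulate(alerts, state, max_alerts=10, min_alerts=1, low_trust=0.4):
    """Hypothetical policy: fewer, higher-priority alerts as stress rises;
    rationales attached when trust in the AI is low."""
    if not (0.0 <= state.stress <= 1.0 and 0.0 <= state.trust <= 1.0):
        raise ValueError("stress and trust must be in [0, 1]")
    # Linearly shrink the alert budget; clamp so at least min_alerts are shown.
    budget = max(min_alerts, int(max_alerts - state.stress * (max_alerts - min_alerts)))
    ranked = sorted(alerts, key=lambda a: a.priority)[:budget]
    shown = []
    for a in ranked:
        text = a.message
        if state.trust < low_trust and a.rationale:
            text += f" (why: {a.rationale})"
        shown.append(text)
    return shown


if __name__ == "__main__":
    alerts = [
        Alert("port scan", 2, "many SYN packets"),
        Alert("malware beacon", 1, "periodic DNS queries"),
        Alert("login burst", 3),
    ]
    print(modulate(alerts, OperatorState(stress=1.0, trust=0.9)))
    print(modulate(alerts, OperatorState(stress=0.0, trust=0.2)))
```

A linear budget is only the simplest choice; a deployed system would more likely learn this mapping from operator performance data collected in a testbed such as the one described above.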